In the rapidly evolving world of artificial intelligence, Large Language Models (LLMs) have emerged as a transformative force. These AI systems can generate human-like text, translate languages, and even produce creative content. However, despite their impressive capabilities, LLMs often make factual errors, and their knowledge is frozen at the point their training data was collected, so on their own they cannot supply newer or highly specialized information. This is where Retrieval-Augmented Generation (RAG) comes into play, offering a promising way to address these limitations.

What is Retrieval-Augmented Generation?

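Retrieval-Augmented Generation is an AI technique that pairs an LLM with an external knowledge source. Instead of relying only on what the model learned during training, a RAG system first retrieves documents relevant to the user's question and then passes them to the LLM as context. Grounding the answer in this retrieved material helps the system deliver more accurate, contextually relevant, and trustworthy responses.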

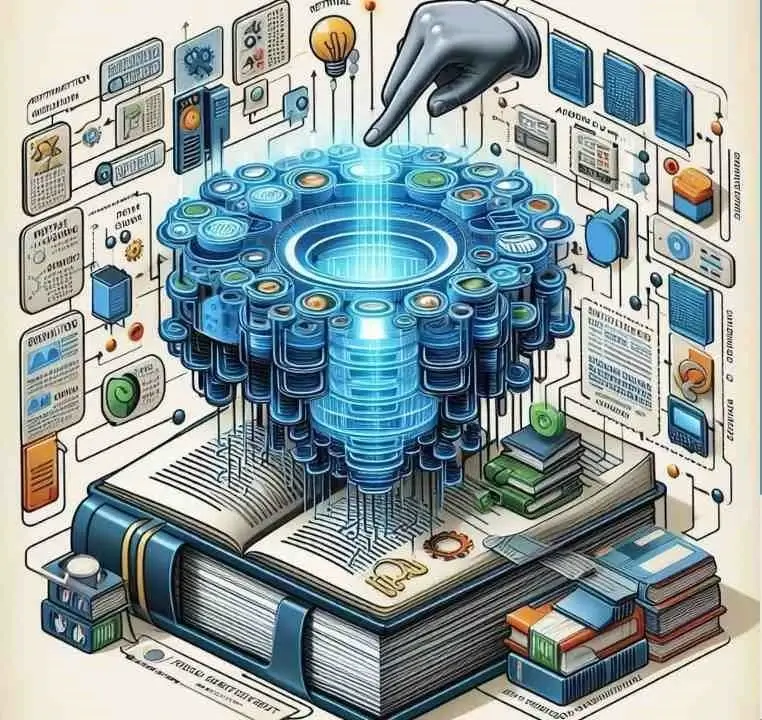

How RAG Works: The AI Librarian Analogy

To understand RAG, imagine a brilliant researcher (the LLM) working alongside a skilled librarian (the retrieval component):

- The Retriever (Librarian): This component searches an external knowledge base, such as a document store or vector database, for passages relevant to the query, much like a librarian searching through books and digital resources.

- The Large Language Model (Researcher): The LLM, with its text generation capabilities, acts as the researcher who turns the gathered material into an answer.

- The Augmentation Step (Research Assistant): This step serves as the bridge, inserting the retrieved passages into the prompt alongside the user's query so the LLM can draw on them.

The RAG Process in Action

- User Query: A user submits a question or prompt to the AI system.

- Information Retrieval: The retriever searches its knowledge base for relevant passages.

- Data Integration: The retrieved passages are combined with the query into a single prompt for the LLM.

- Response Generation: The LLM processes the query and retrieved data to craft a comprehensive response.

- Output: The system delivers an answer grounded in the retrieved information.
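The five steps above can be sketched in a few lines of Python. This is a minimal, self-contained illustration under stated assumptions, not a production design: the three-document knowledge base is made up, retrieval uses simple word-count cosine similarity where real systems usually use learned embeddings, and `generate` is a hypothetical stand-in for a call to an actual LLM.

```python
import math
import re
from collections import Counter

# Hypothetical toy knowledge base; a real system would index many documents.
DOCUMENTS = [
    "RAG retrieves relevant passages before the language model generates an answer.",
    "The Eiffel Tower is located in Paris, France.",
    "Python was created by Guido van Rossum.",
]


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Information retrieval: rank documents by similarity to the query, keep the top k."""
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, passages: list[str]) -> str:
    """Data integration: place the retrieved passages in the prompt ahead of the question."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only the context below.\nContext:\n{context}\nQuestion: {query}\nAnswer:"


def generate(prompt: str) -> str:
    """Response generation: hypothetical stand-in for a real LLM call on `prompt`."""
    return f"[LLM response conditioned on]\n{prompt}"


def answer(query: str) -> str:
    """Run the whole pipeline: query -> retrieve -> augment -> generate -> output."""
    passages = retrieve(query, DOCUMENTS)
    return generate(build_prompt(query, passages))


print(answer("Where is the Eiffel Tower?"))
```

Swapping in a stronger retriever (for example an embedding model with a vector index) or a real model call changes only `retrieve` or `generate`; the retrieve, augment, generate structure stays the same.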

Key Advantages of RAG Technology

RAG offers several significant benefits over traditional LLMs:

1. Enhanced Accuracy and Reliability

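By grounding responses in retrieved documents, RAG can reduce the risk of factual errors and “hallucinations” (invented information) that plague standalone LLMs. It does not eliminate them: the model may still misread or ignore the retrieved context, and an answer is only as reliable as the sources behind it.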
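One common way to lean on retrieval for accuracy is to have the system decline when nothing relevant is found, rather than letting the model answer from memory. The sketch below assumes a simple word-overlap (Jaccard) score and an arbitrary threshold of 0.2; both are illustrative choices, not recommended values, and `grounded_context` is a hypothetical helper name.

```python
import re
from typing import Optional


def tokens(text: str) -> set[str]:
    """Lowercased set of alphanumeric words, used for a simple overlap score."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Jaccard overlap |A & B| / |A | B|; 0.0 when both sets are empty."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def grounded_context(query: str, docs: list[str], threshold: float = 0.2) -> Optional[str]:
    """Return the best-matching passage, or None if nothing clears the threshold.

    A None result lets the caller reply "I don't know" instead of letting the
    model guess. The threshold value is an illustrative assumption.
    """
    q = tokens(query)
    best = max(docs, key=lambda d: jaccard(q, tokens(d)), default=None)
    if best is None or jaccard(q, tokens(best)) < threshold:
        return None
    return best


docs = ["The Eiffel Tower is located in Paris, France.", "Python was created by Guido van Rossum."]
print(grounded_context("Where is the Eiffel Tower?", docs))     # the Eiffel Tower passage
print(grounded_context("What is the capital of Japan?", docs))  # → None
```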

2. Improved Contextual Understanding

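Because the LLM receives passages selected for the specific query, including domain- or organization-specific material that was never part of its training data, its responses can be more relevant to the user's actual context.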

3. Increased Transparency and Trust

Because a RAG system knows which documents it retrieved, it can show or cite those sources alongside its answer, which fosters user trust and lets readers check the claims themselves.
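Citations are straightforward to support because the application already knows which passages it retrieved. A minimal sketch, assuming passages arrive as `(source, text)` pairs and using a hypothetical helper `build_cited_prompt`: number each passage in the prompt, ask the model to cite by number, and keep the number-to-source map to display next to the answer. The model may still cite incorrectly, which is exactly why exposing the sources for checking is useful.

```python
def build_cited_prompt(query: str, passages: list[tuple[str, str]]) -> tuple[str, dict[int, str]]:
    """Number each retrieved passage so the model can cite it as [1], [2], ...

    `passages` is a list of (source, text) pairs; the source strings are
    hypothetical placeholders for whatever IDs or URLs a real index stores.
    Returns the prompt plus a map from citation number to source.
    """
    lines = []
    sources = {}
    for i, (source, text) in enumerate(passages, start=1):
        lines.append(f"[{i}] {text}")
        sources[i] = source
    prompt = (
        "Answer the question using the numbered sources below. "
        "Cite each claim with its source number, e.g. [1].\n\n"
        + "\n".join(lines)
        + f"\n\nQuestion: {query}\nAnswer:"
    )
    return prompt, sources


prompt, sources = build_cited_prompt(
    "Who created Python?",
    [("docs/python-history.txt", "Python was created by Guido van Rossum.")],  # hypothetical path
)
print(prompt)
print(sources)  # {1: 'docs/python-history.txt'}
```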

4. Flexibility and Scalability

New information sources can be added to a RAG system's knowledge base without retraining the underlying model, so responses can stay current as knowledge changes.
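The claim that new knowledge requires no retraining can be seen in a toy example. The `KnowledgeBase` class below is hypothetical and scores documents by shared words only, and the two policy documents are made-up data. After a newer document is added, the very next search returns it first, while the language model itself would be left untouched.

```python
import re


def words(text: str) -> set[str]:
    """Lowercased set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


class KnowledgeBase:
    """Toy in-memory document store (hypothetical; real systems often use a vector database)."""

    def __init__(self) -> None:
        self.docs: list[str] = []

    def add(self, text: str) -> None:
        """Make a new document retrievable immediately; no model training involved."""
        self.docs.append(text)

    def search(self, query: str) -> list[str]:
        """Return documents sharing at least one word with the query, most overlap first."""
        q = words(query)
        scored = [(len(q & words(d)), d) for d in self.docs]
        ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
        return [d for score, d in ranked if score > 0]


kb = KnowledgeBase()
kb.add("The 2023 policy allows 20 vacation days.")
print(kb.search("vacation policy 2024"))  # only the 2023 document exists so far
kb.add("The 2024 policy allows 25 vacation days.")
print(kb.search("vacation policy 2024"))  # the newly added 2024 document now ranks first
```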

5. Efficient Implementation

A basic RAG pipeline requires no model training or fine-tuning and can be assembled with relatively little code, making it accessible to a wide range of developers and organizations.

Real-World Applications of RAG

The potential applications for RAG technology are vast and growing:

- Advanced Chatbots and Virtual Assistants: RAG can power more intelligent, informative digital assistants capable of handling complex queries across various domains.

- Enhanced Search Engines: By combining traditional search with an LLM's ability to summarize results in natural language, RAG could change how we find and interact with information online.

- Intelligent Content Creation: RAG can assist in generating well-researched articles, reports, and other content, streamlining the writing process while tying claims to identifiable sources.

- Educational Platforms: Interactive learning systems powered by RAG could offer personalized, in-depth explanations on a wide range of subjects.

- Healthcare and Medical Research: RAG could assist medical professionals by providing up-to-date information on treatments, drug interactions, and recent studies.

- Legal and Compliance: In fields where accuracy is paramount, RAG can help professionals navigate complex regulations and case law.

Challenges and Future Developments

While RAG represents a significant advancement, there are still challenges to address:

- Data Quality and Bias: Ensuring the accuracy and neutrality of knowledge sources is crucial.

- Real-time Updates: Developing systems that can quickly incorporate new information as it becomes available.

- Computational Efficiency: Optimizing the retrieval process to maintain speed and performance at scale.

- Ethical Considerations: Addressing privacy concerns and potential misuse of powerful AI systems.
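As an example of the computational-efficiency challenge, an inverted index lets a keyword retriever score only the documents that share at least one term with the query, instead of every document in the collection. This is a classic information-retrieval technique chosen here for illustration; systems built on dense embeddings usually rely on approximate nearest-neighbour indexes instead. The `InvertedIndex` class and its documents are hypothetical.

```python
import heapq
import re
from collections import defaultdict


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


class InvertedIndex:
    """Maps each term to the IDs of the documents that contain it."""

    def __init__(self) -> None:
        self.postings: dict[str, set[int]] = defaultdict(set)
        self.docs: list[str] = []

    def add(self, text: str) -> int:
        """Store a document and register each of its distinct terms."""
        doc_id = len(self.docs)
        self.docs.append(text)
        for term in set(tokenize(text)):
            self.postings[term].add(doc_id)
        return doc_id

    def search(self, query: str, k: int = 3) -> list[str]:
        """Count matching query terms per candidate document and return the top k."""
        counts: dict[int, int] = defaultdict(int)
        for term in set(tokenize(query)):
            # Only documents listed under a query term are ever touched.
            for doc_id in self.postings.get(term, ()):
                counts[doc_id] += 1
        # Rank by match count; ties go to the earlier document for a stable order.
        top = heapq.nlargest(k, counts.items(), key=lambda item: (item[1], -item[0]))
        return [self.docs[doc_id] for doc_id, _ in top]


index = InvertedIndex()
for text in ["Paris is the capital of France.", "Berlin is the capital of Germany.", "Python is a programming language."]:
    index.add(text)
print(index.search("capital of France", k=1))  # ['Paris is the capital of France.']
```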

The Future of AI with RAG

As research in RAG technology progresses, we can expect to see:

- More sophisticated retrieval mechanisms, possibly incorporating multi-modal data (text, images, video).

- Improved integration with specialized knowledge bases for domain-specific applications.

- Enhanced ability to reason over retrieved information, leading to more insightful AI interactions.

- Greater customization options, allowing users to tailor RAG systems to their specific needs and knowledge sources.

Conclusion: RAG as a Catalyst for AI Evolution

Retrieval-Augmented Generation represents a significant leap forward in the quest for more capable, trustworthy, and useful AI systems. By combining the language abilities of LLMs with external, verifiable knowledge sources, RAG is paving the way for AI that can augment human work across a wide range of fields.

As this technology continues to evolve, it promises to unlock new possibilities in how we interact with information, solve complex problems, and push the boundaries of what’s possible with artificial intelligence. AI enhanced by RAG is not just more capable; it is better informed, more reliable, and easier to check against its sources.